Sensation Suit

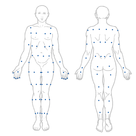

I’ve sewn 88 WiFi-controlled vibration motors into a full body suit. Here’s why and how.

But... why?

Virtual environments are getting more and more realistic. The most prominent recent advance has been consumer head-mounted displays like the Oculus Rift. However, most improvements have been made to visual and audio output; little has been done to improve the haptic feedback from the virtual world to the human body. But it’s so promising!

Interesting experiments have been conducted in recent years, most famously the Rubber Hand Illusion. Subsequently, the Swedish professor Henrik Ehrsson has shown that what’s possible for single body parts is also possible for the whole body, as a Body Transfer Illusion. You can literally shake hands with yourself without noticing it’s actually you. Check out his experiments, they’re really mind-blowing.

All this left me convinced that what’s possible in the real world should also be possible in VR as an Avatar Transfer Illusion: making people believe the avatar they’re seeing is their own body. A few experiments have been done in this direction and the results are promising.

According to Ehrsson, three conditions have to be satisfied for a transfer illusion to be possible:

- The usage of a sufficiently humanoid body

- The adoption of a first person visual perspective of the body

- A continuous match between visual and somatosensory information about the state of the body

The first two are pretty easy to meet in virtual environments. I set out to get a step closer to seeing what happens if you meet all three.

Hardware

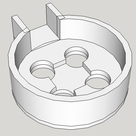

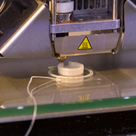

I used 88 10 mm coin vibration motors from Precision Microdrives (thanks again guys - you are awesome!). They were sewn into elastic sports clothes, each one held in place by a custom-modelled, 3D-printed mount.

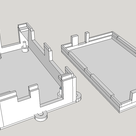

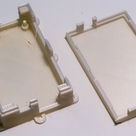

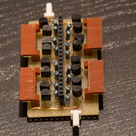

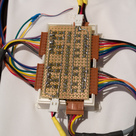

Six PCA9685 chips drive up to 16 motors each. Because the motors draw more current than the chips can handle, I’ve built six boards with 16 simple amplifier circuits each. Stacked on top of each other, they sit in custom cases, which are also sewn onto the clothes.

The chips are all connected to an I2C bus. So is a Raspberry Pi, which acts as the central controller. It sits in a small pouch above the left hip.
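To make the addressing concrete, here is a minimal sketch of how a flat motor index could be mapped onto six 16-channel driver chips on one I2C bus. The base address `0x40`, the consecutive addressing, the 12-bit PWM range and the names `locate_motor` and `duty_cycle` are all assumptions for illustration; they are not taken from the project's source code.

```python
MOTORS_PER_CHIP = 16   # channels per driver chip (from the text above)
CHIP_COUNT = 6         # number of driver chips (from the text above)
BASE_ADDRESS = 0x40    # ASSUMPTION: first chip's I2C address
PWM_MAX = 4095         # ASSUMPTION: 12-bit PWM resolution


def locate_motor(index):
    """Map a flat motor index to (I2C address, channel on that chip)."""
    if not 0 <= index < MOTORS_PER_CHIP * CHIP_COUNT:
        raise ValueError("motor index out of range: %r" % index)
    chip, channel = divmod(index, MOTORS_PER_CHIP)
    # ASSUMPTION: the chips are addressed consecutively from BASE_ADDRESS.
    return BASE_ADDRESS + chip, channel


def duty_cycle(intensity):
    """Convert an intensity in [0.0, 1.0] to a PWM count, clamping out-of-range input."""
    clamped = min(max(intensity, 0.0), 1.0)
    return round(clamped * PWM_MAX)
```

With this layout, `locate_motor(87)` lands on the sixth chip, which leaves eight of the 96 available channels unused when 88 motors are connected.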

Everything is powered by a modded ATX power supply.

Software

The server software running on the Raspberry Pi is written in Python and based around the new asyncio library. I’ve chosen Google Protocol Buffers for the network communication. The source code is available on GitHub.
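For readers who haven't used asyncio, the following is a rough sketch of the general shape of such a server, not the actual code from the repository. To stay self-contained it uses a made-up two-byte frame (`FRAME`) instead of Protocol Buffers, modern `async`/`await` syntax rather than the `yield from` style of early asyncio, and an arbitrary port; the function names are hypothetical as well.

```python
import asyncio
import struct

# ASSUMPTION: stand-in wire format, NOT the project's Protocol Buffers schema.
# One unsigned byte for the motor index, one for the intensity (0-255).
FRAME = struct.Struct("!BB")


async def read_commands(reader):
    """Yield (motor, intensity) tuples until the peer closes the stream."""
    while True:
        try:
            data = await reader.readexactly(FRAME.size)
        except asyncio.IncompleteReadError:
            return  # connection closed (possibly mid-frame): stop reading
        yield FRAME.unpack(data)


async def handle_client(reader, writer):
    """Per-connection coroutine, suitable as a callback for asyncio.start_server()."""
    async for motor, intensity in read_commands(reader):
        # Placeholder: a real server would update the motor's PWM value here.
        print("motor", motor, "-> intensity", intensity)
    writer.close()


async def serve(host="0.0.0.0", port=9000):  # port is an arbitrary example
    server = await asyncio.start_server(handle_client, host, port)
    async with server:
        await server.serve_forever()


async def demo():
    """Feed two frames into an in-memory reader, so no network is needed."""
    reader = asyncio.StreamReader()
    reader.feed_data(FRAME.pack(3, 200) + FRAME.pack(87, 64))
    reader.feed_eof()
    return [command async for command in read_commands(reader)]
```

Calling `asyncio.run(serve())` would start listening; `asyncio.run(demo())` exercises the decoding loop on its own.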

Unfortunately, the pure Python implementation of Protobuf is painfully slow on the Raspberry Pi. I’ve profiled and optimized a lot, but didn’t manage to get above 350 messages per second, which means a mere 4 messages per motor per second. These messages are handled in a median time of 90 ms.

I also developed a Unity plugin as a reference client implementation. It’s on GitHub, too.
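The per-motor figure follows from simple division. A quick sketch of the arithmetic, assuming each message addresses exactly one motor and taking the 88 motors from the hardware section (the variable and function names are just for illustration):

```python
MESSAGES_PER_SECOND = 350  # measured server throughput (from the text)
MOTOR_COUNT = 88           # motors in the suit (from the hardware section)


def per_motor_rate(messages_per_second, motor_count):
    """Updates per second each motor gets if messages are spread evenly."""
    return messages_per_second / motor_count


rate = per_motor_rate(MESSAGES_PER_SECOND, MOTOR_COUNT)
interval_ms = 1000 / rate  # time between two updates of the same motor

print("%.2f updates per motor per second" % rate)
print("one update roughly every %.0f ms" % interval_ms)
```

So when every motor is active at once, each one can only change its intensity about once every quarter of a second.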

Usage

I’d have loved to find out whether an Avatar Transfer Illusion is possible, what factors contribute to it and what needs to be improved. But time was simply up and I had to hand in my thesis.

However, I pimped the Unity Angry Bots project to demonstrate how everything comes together. I changed the perspective to first person, added Oculus Rift support, Wiimote controls and, last but not least, the sensation plugin. You feel the distance to obstacles to your side or behind you, a radar lets you sense the direction and distance of enemies, your lower arms vibrate while you fire your weapon and different zones vibrate depending on where you’ve been hit.
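As an illustration of the obstacle feedback, here is one way a distance could be turned into a vibration intensity. This is only a sketch: the linear falloff, the 2 m default range and the name `proximity_intensity` are assumptions, and the real client is a Unity plugin rather than Python.

```python
def proximity_intensity(distance, max_range=2.0):
    """Map a distance to an intensity in [0.0, 1.0]: closer means stronger.

    ASSUMPTION: linear falloff that reaches zero at max_range (same unit as distance).
    """
    if max_range <= 0:
        raise ValueError("max_range must be positive")
    if distance >= max_range:
        return 0.0  # obstacle too far away to be felt
    return 1.0 - max(distance, 0.0) / max_range
```

A non-linear curve might feel more natural in practice; that would need testing on the suit itself.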

Next up

The list of what should be improved or could be done next is long. The most prominent items are:

- Find clothes that are easier to put on

- Design a custom board with SMD components to shrink the worn hardware

- Speed up Python + Protocol Buffers

  - The C++-backed implementation should bring some improvements. I will try it over Easter (if it is released for Python 3.4 by then)

  - The faster Raspberry Pi 2 with its quad core could help

- Experiment with different actuators - I’d love to test the potential of electrical muscle stimulation!

And, last but not least, study what’s necessary to induce an Avatar Transfer Illusion. After all, that’s why I built the thing.

What do you think? Just leave any questions or comments below - I’m happy to elaborate!